one branch to rule them all | guided series #2

A lot of us deploy our apps to multiple cloud environments (or soon will). Now that you know where to start, let's scope and implement some of the ideas!

Welcome (back?), dear reader! 🙌🏼

tl;dr: My goal for this short series is very simple: teach you by example. Together, we're going through the full process I follow to solve various problems:

- 🔍 gather and understand requirements

- 🧠 brainstorm solutions

- 🎯 scope

- 👨🏻‍💻 implement & test (iterate until convergence)

- 🛑 stop (sounds easy? :p)

What are we working on in this series?

In the previous post, I described the first two steps from the list above – we gathered and understood the requirements, and brainstormed various solutions. If you haven't already read this one, it may be a good time to do so as we'll be building on top of it 👇🏼

If you don't have time to read it, that's okay! We'll do a quick recap in a minute.

.

├── ⏮ Recap

├── 🎯 Scope

│   ├── 📋 req #1: No-brainer git flow

│   ├── 📋 req #2: Application needs to be versioned

│   └── 📋 req #3–4: Configurable deployment targets

└── 👨🏻‍💻 Implement & test

    ├── Reference point

    └── Step 1: version the application

        ├── git tagging

        └── Containerize the application

⏮ Recap

That was quite a lot of content to digest last time, so what do you say we recap?

Our problem has been defined as follows:

Based on the analysis of the problem and a tiny bit of additional specification, we arrived at the following list of requirements:

- Git flow must be a no-brainer – we don't want to deal with multiple branches that will have to be frequently synchronized.

- Application needs to be versioned.

- It must be possible to deploy the required version of the application to the selected deployment environment.

- Every environment runs only one version of the application at the same time.

- [bonus] automate manual processes to a reasonable extent.

With these in mind, we've brainstormed various approaches that could be used to fulfill the requirements.

📋 req #1: repository branching & development strategy:

option #1: git-flow

option #2: pure trunk-based

option #3: permissive trunk-based

📋 req #2: application versioning:

option #1: git tagging

option #2: containers with tagging

📋 req #3: configurable deployment environments:

option #1: config files

option #2: helm charts

option #3: branch-based deployment

🎯 Scope

I call the third step of my problem solving process scoping.

You can treat it as the symbolic conclusion of the design process. When done properly, scoping should let you implement a functional PoC. Keep in mind that the solutions you pick may lead to issues you haven't predicted. In such cases, it may be necessary to go back and re-scope the options to pick another alternative. And that's okay. Remember: software development is an iterative process!

I'd say that the number of times you need to go back and forth between the implementation and scoping phases is inversely proportional to the experience you have in the field: the more exposure you get to various problems, the better your designs and predictions, and thus the less iteration is necessary.

With that, let's scope, requirement by requirement.

📋 req #1: No-brainer git flow

option #1: git-flow

option #2: pure trunk-based

👉🏼 option #3: permissive trunk-based

The choice is easy: git-flow is too complex for our use case, as we don't want to deal with multiple branches that have to be frequently synchronized. Pure trunk-based development is a bit too hardcore for slightly larger teams and requires automated CI upfront. The permissive trunk-based approach with short-lived feature branches is a perfect choice. It strikes a great balance between complexity and efficiency 👌🏼.

If you're interested, you can read about the considered options in the previous post.

📋 req #2: Application needs to be versioned

👉🏼 option #1: git tagging

👉🏼 option #2: containers with tagging

Didn't expect that one! To be honest, the main solution that I'm going to pick is option #2. As mentioned in the brainstorming step, introducing containerization to your project has a lot of benefits and it's worth every minute invested. It will allow the application to be versioned conveniently, plus the execution environment will be clearly defined. Win-win.

However, we'll also introduce git tagging.

Why?

To make it easier to check out the code at a specific version of the application and play with, analyze, or debug it. It's much easier and faster to check out a specific git tag than to spin up a container. Plus, application containers are (or at least should be) minimal, meaning that no additional tools for testing/debugging should be included in them. This makes it more challenging to debug the code from within them, hence a local checkout may be handy in many situations.

Read more about the options here.

📋 req #3-4: Configurable deployment targets

👉🏼 option #1: config files

option #2: helm charts

option #3: branch-based deployment

Firstly, let's go with the easy one to REJECT: helm charts. As part of this tutorial, we're not going to build anything that will be deployed to Kubernetes, hence the decision. Nonetheless, the proposed solution will be Kubernetes-compatible to a certain degree.

Secondly, branch-based deployment. Our selected branching strategy is "permissive trunk-based development". We'll have one main branch and allow several short-lived feature branches. That's all. A branch-based deployment strategy would require long-lived branches other than main itself (e.g. develop). No such branches are allowed, so branch-based deployment goes in the trash.

What's left is the approach leveraging config files. It's simple, versatile, and effective, and will fit our design perfectly.

Details on all the options are here.

Uff, scoping done! 😅

Now, the only thing left is to implement and test all of that. Easy-peasy [sarcasm]

👨🏻‍💻 Implement & test

I've divided this phase into incremental steps so that it's easier for you to see the gradual improvements introduced to the code and the project overall, and how to fulfill certain requirements. The code is available on GitHub, check it out here. For every implementation milestone, I've prepared a dedicated tag in the repository so that it's easier for you to check out the code and play with it or, of course, fork it if that's what you'd like to do.

Reference point

Let's start with the reference point of the project. Go to GitHub's UI or run the command below on your machine to check out the code:

git checkout 1-reference-point

This is the state of the repository you've been given. We'll take it from here. It contains some basic stuff like:

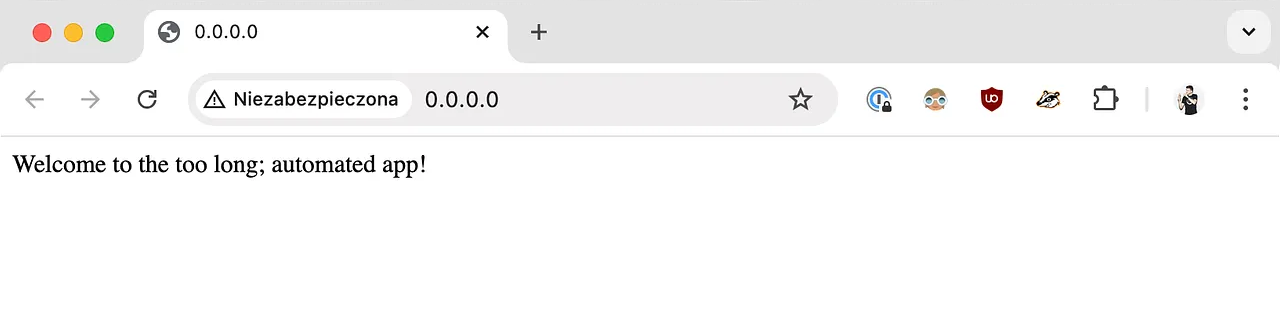

- simple flask server displaying welcome message (app/src directory)

- smoke unit test and a placeholder for integration tests (app/test/unit and app/test/integration dirs)

- starter README file

Ensure docker and Python are installed in your development environment, then install the requirements, and continue to either run the tests or the application locally. Refer to the README for details on how to do that.

Once you run the application locally, you should see the following screen 👇🏼

Step 1: version the application

Recap: we'll use git tags and Docker image tags to keep our application versioned. Let's start with git tags, as they're the easy part.

But first things first.

We need a file that will be used to log all the changes introduced to our app. The file will be called…

🥁🥁🥁

💥 CHANGELOG.md 💥

I'm a big fan of the semantic versioning scheme. It sounds mysterious, but I bet you've already seen this approach in action multiple times:

<MAJOR>.<MINOR>.<PATCH> # e.g. "1.4.2"

What are these "major", "minor", and "patch"? Here's an excerpt from the docs:

- MAJOR version when you make incompatible API changes

- MINOR version when you add functionality in a backward compatible manner

- PATCH version when you make backward compatible bug fixes

That's the approach we're going to follow in our change log.
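If it helps to see the bump rules as code, here's a minimal Python sketch. The `Version` class and its `bump` method are hypothetical names used for illustration (they are not part of the tutorial repository), and pre-release/build suffixes from the full spec are deliberately left out.

```python
import re
from dataclasses import dataclass

# MAJOR.MINOR.PATCH without leading zeros; pre-release/build suffixes are out of scope here.
_SEMVER = re.compile(r"(0|[1-9]\d*)\.(0|[1-9]\d*)\.(0|[1-9]\d*)")


@dataclass(frozen=True, order=True)
class Version:
    """Hypothetical helper: a plain MAJOR.MINOR.PATCH version."""

    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "Version":
        match = _SEMVER.fullmatch(text.strip())
        if match is None:
            raise ValueError(f"not a MAJOR.MINOR.PATCH version: {text!r}")
        return cls(*(int(part) for part in match.groups()))

    def bump(self, part: str) -> "Version":
        """Return the next version; lower-order parts reset to zero."""
        if part == "major":
            return Version(self.major + 1, 0, 0)
        if part == "minor":
            return Version(self.major, self.minor + 1, 0)
        if part == "patch":
            return Version(self.major, self.minor, self.patch + 1)
        raise ValueError(f"unknown part: {part!r}")

    def __str__(self) -> str:
        return f"{self.major}.{self.minor}.{self.patch}"
```

For example, `Version.parse("1.4.2").bump("minor")` gives `1.5.0`: the minor number goes up and the patch number resets. Because the fields are compared in order, `1.10.0` also sorts after `1.9.9`, which plain string comparison would get wrong.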

Now the important thing: when should you update the version of the application and add a new entry to the change log?

Whenever you add/remove/change any file strictly related to the application.

This can be source code, requirements, static files that are used by the application from within the container, that sort of thing. It may be a bit hard to grasp in the beginning, but you'll quickly learn to judge properly, I promise. Plus, that's why there are other developers reviewing your code: to help build these intuitions 🙌🏼
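As a rough first approximation of that judgment call, you could check whether any changed file lives under an "application" path. This is only a sketch: `APP_PATHS` and `needs_version_bump` are hypothetical names, and the prefixes are an assumption based on the app/ layout used in this tutorial, so adjust them to your own project.

```python
from typing import Iterable, Tuple

# Assumption: these prefixes are what "strictly related to the application" means
# for a repository laid out like this one. They are illustrative, not a rule.
APP_PATHS: Tuple[str, ...] = ("app/src/", "app/requirements.txt")


def needs_version_bump(
    changed_files: Iterable[str], app_paths: Tuple[str, ...] = APP_PATHS
) -> bool:
    """Return True if any changed file belongs to the application itself."""
    return any(path.startswith(app_paths) for path in changed_files)
```

With these prefixes, a change to `app/src/main.py` calls for a new version, while a change that only touches `README.md` or the tests does not. It won't replace a reviewer's intuition, but it's a decent reminder.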

git tagging

As mentioned before, you can think of git tags as "named commits": you can reference a specific point in the repository's git history using a human-friendly string instead of the commit's SHA. At this point in the implementation, we're just going to define the rules for creating new git tags, not automate the process in any way. We'll start with the following rule:

To make it crystal clear: you decide that the app will have version 1.0.1 because there was one small backward-compatible bug that you fixed. What you should do:

- Create new feature branch from the main branch.

- Fix the bug in the application and put new entry in the CHANGELOG.md file, briefly describing the change.

- Open PR to the main branch.

- Once approved, merge the code.

- Checkout main locally and pull the changes.

- Create a tag with the following command:

git tag 1.0.1

- Push the tag to the remote:

git push --tags
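Since tagging is still manual at this stage, a cheap guard is to confirm that the tag you're about to create matches the newest entry in CHANGELOG.md. The sketch below is an assumption-heavy illustration: it assumes each release is a Markdown heading such as `## 1.0.1` or `## [1.0.1]` with the newest release first, and the function names are hypothetical.

```python
import re
from typing import Optional

# Assumption: releases are Markdown headings like "## 1.0.1" or "## [1.0.1]",
# and the newest release appears first in the file.
_RELEASE_HEADING = re.compile(r"^#{1,6}[ \t]*\[?v?(\d+\.\d+\.\d+)\]?", re.MULTILINE)


def latest_changelog_version(changelog_text: str) -> Optional[str]:
    """Return the version from the first release heading, or None if there is none."""
    match = _RELEASE_HEADING.search(changelog_text)
    return match.group(1) if match else None


def tag_matches_changelog(tag: str, changelog_text: str) -> bool:
    """True when the tag equals the newest version recorded in the change log."""
    return latest_changelog_version(changelog_text) == tag
```

You could read `app/CHANGELOG.md` into a string and call `tag_matches_changelog("1.0.1", text)` right before running `git tag 1.0.1`. If it returns `False`, either the change log entry or the tag name is off.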

Containerize the application

Now is the perfect time to containerize our application. How?

- wrap your app with Docker into a docker image

- build the image locally with a minimal, yet functional set of files required by the app to run properly

- [optional] set up a docker image registry on Docker Hub

- push the image to the registry

To make app containerization as straightforward as possible, it is useful to first organize it into "a container-friendly" structure:

app/

├── src/

├── test/

│ ├── integration/

│ └── unit/

├── CHANGELOG.md

├── requirements-test.txt

└── requirements.txt

I think it's pretty self-explanatory, so let's continue.

We'll add two files to this mix under the app directory: Dockerfile and .dockerignore. Since our application is a super simple Flask server, Dockerfile will be rather straightforward:

FROM python:3.10-slim

EXPOSE 80

ENV FLASK_APP=main.py

COPY . /app

WORKDIR /app/src

RUN pip install --no-cache-dir -r /app/requirements.txt

CMD ["gunicorn", "-w", "4", "-b", "0.0.0.0:80", "main:app"]

And the corresponding .dockerignore (to ensure only the files that are essential for the server to run properly inside a container are copied to the image during the build procedure):

**/__pycache__/

.pytest_cache/

test/

requirements-test.txt

*.md

.dockerignore

Dockerfile

Note that test requirements are NOT put into the app container as they're not needed there for the app to run correctly. If required, you may of course create a separate Dockerfile that would set up the container for testing purposes. I can imagine at least several use cases that would benefit from doing it, e.g. automating unit and/or integration test execution by running the tests in Docker containers.
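To get a feel for what the .dockerignore above leaves in the build context, here's a simplified matcher in Python. It's a sketch, not Docker's actual implementation: it handles `*` within a path segment and `**` across directories, but ignores `!` exceptions and `#` comments. The file names inside src/ and test/ are illustrative, and all function names are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List, Sequence


def _segments_match(pattern: Sequence[str], path: Sequence[str]) -> bool:
    """Match path segments one by one; a '**' segment spans zero or more directories."""
    if not pattern:
        return not path
    head, rest = pattern[0], pattern[1:]
    if head == "**":
        return any(_segments_match(rest, path[i:]) for i in range(len(path) + 1))
    return bool(path) and fnmatchcase(path[0], head) and _segments_match(rest, path[1:])


def is_ignored(path: str, patterns: Iterable[str]) -> bool:
    """Simplified .dockerignore check (no '!' exceptions, no '#' comments)."""
    parts = path.strip("/").split("/")
    for raw in patterns:
        pattern = raw.strip().strip("/").split("/")
        # Excluding a directory excludes everything below it, so test every ancestor too.
        if any(_segments_match(pattern, parts[:n]) for n in range(1, len(parts) + 1)):
            return True
    return False


def build_context(files: Iterable[str], patterns: Iterable[str]) -> List[str]:
    """Return the files that would survive the ignore patterns, in their original order."""
    patterns = list(patterns)
    return [f for f in files if not is_ignored(f, patterns)]


PATTERNS = [
    "**/__pycache__/", ".pytest_cache/", "test/", "requirements-test.txt",
    "*.md", ".dockerignore", "Dockerfile",
]

# Paths are relative to the build context (app/); names inside src/ and test/ are made up.
FILES = [
    "src/main.py",
    "src/__pycache__/main.cpython-310.pyc",
    "test/unit/test_smoke.py",
    "CHANGELOG.md",
    "Dockerfile",
    ".dockerignore",
    "requirements-test.txt",
    "requirements.txt",
]
```

Calling `build_context(FILES, PATTERNS)` on this sample leaves only `src/main.py` and `requirements.txt`, which is exactly the "minimal, yet functional" set we're after.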

With the Docker-related files added, the app directory should now look like this:

app/

├── src/

├── test/

│ ├── integration/

│ └── unit/

├── .dockerignore

├── CHANGELOG.md

├── Dockerfile

├── requirements-test.txt

└── requirements.txt

Believe it or not, this is enough to build a Docker image with our application and publish it to a Docker image registry.

Run the following to build a docker image locally:

cd app

docker build -t tutorial-1 .

The image will be called tutorial-1. To run it, use the following command:

docker run --rm -p 80:80 tutorial-1

Note that the directory from which you run the container is NOT important in this case. The Docker container runs the Flask server inside, listening on port 80 of the container. To make it available outside of the container, we need to forward this port to some port on the host. That's what the -p flag does: -p 80:80 maps port 80 of the local machine to port 80 of the container (the format is host:container).
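If you'd rather verify the port forwarding from a script than from the browser, a tiny smoke check could look like the sketch below. It uses only the Python standard library; `server_is_up` is a hypothetical helper name, and the URL you pass is whatever host port you chose in `-p`.

```python
import urllib.error
import urllib.request


def server_is_up(url: str, timeout: float = 2.0) -> bool:
    """Return True if the URL answers with a 2xx status within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False
```

With the container from the previous command running, `server_is_up("http://localhost:80/")` should return `True`. Stop the container and it should flip to `False`.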

Once built, the image can be pushed to an external registry (a place on the Internet where your Docker images are stored). For this tutorial, I've prepared a helper Docker Hub repository. It's public, meaning, you can pull the images from it without any limitations, however…

Okay, back to the gist.

Where were we… ah, yes, building and pushing Docker images.

To push the image, you need to first authenticate to your image registry of choice. In my case it's the Docker Hub, so I simply need to run:

docker login

Next, let's tag the image we built earlier (tutorial-1) with the repository name and version:

docker tag tutorial-1 toolongautomated/tutorial-1:1.0.0

Then, to push the built image:

docker push toolongautomated/tutorial-1:1.0.0
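The tag and push commands always follow the same pattern, so it's easy to generate them from the version number. Here's a small sketch under that assumption: `image_reference` and `release_commands` are hypothetical helpers, and they only build the command lists, they don't run anything.

```python
from typing import List


def image_reference(namespace: str, repository: str, version: str) -> str:
    """Compose a Docker Hub style reference: <namespace>/<repository>:<version>."""
    return f"{namespace}/{repository}:{version}"


def release_commands(
    local_image: str, namespace: str, repository: str, version: str
) -> List[List[str]]:
    """Return the docker commands (as argument lists) that tag and push an image."""
    remote = image_reference(namespace, repository, version)
    return [
        ["docker", "tag", local_image, remote],
        ["docker", "push", remote],
    ]
```

`release_commands("tutorial-1", "toolongautomated", "tutorial-1", "1.0.0")` yields the same two commands as above. Each list can be handed to `subprocess.run(cmd, check=True)` once you've run `docker login`.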

This concludes step 1 of our implementation efforts. We now have a clear git tagging process defined, and the app is containerized. I encourage you to spend some time with the code and read the README until you get comfortable with what you see there.

Next on the agenda is the final piece of the baseline requirements: configuration of multiple deployment targets. We'll talk about it in the next article.

I hope you enjoyed this article and learned something new. If you have any thoughts or suggestions, please feel free to reach out to me either via email or via X.

See you in the next one! 👋🏼